Benchmarks

Measured on real laptops.

Every FastFlowLM release is validated on Ryzen™ AI NPUs.

We publish the results in docs/benchmarks so teams can compare apples-to-apples.

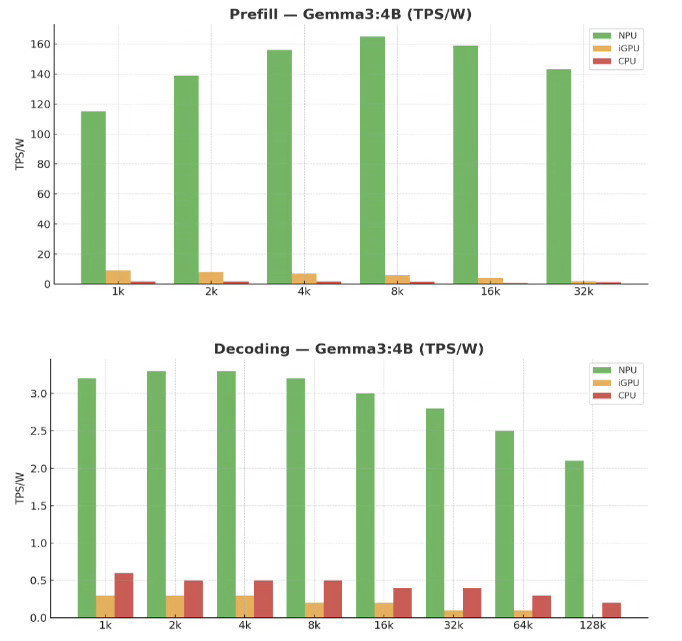

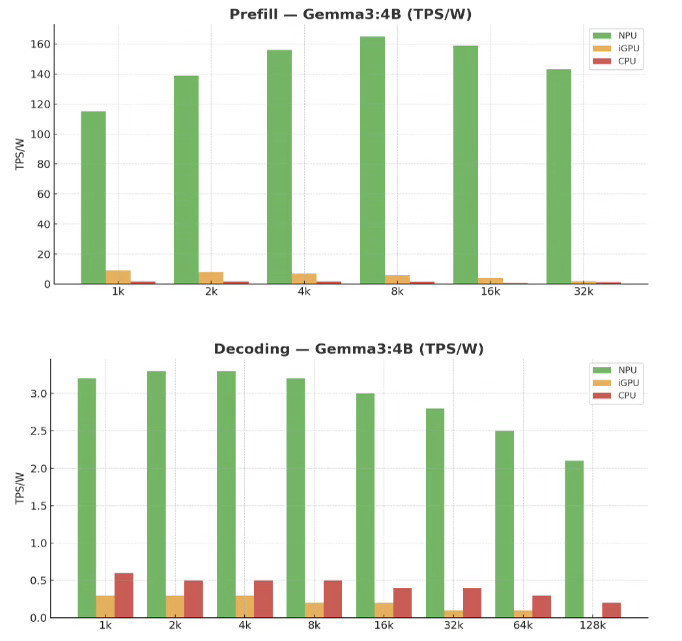

The runtime extends AMD’s native 2K context limit to 256K tokens for long-context LLMs and VLMs and, in power efficiency tests, consumes 67.2× less energy per token than the integrated GPU and 222.9× less energy per token than the CPU on the same chip while holding higher throughput.

GPT-OSS 20B

19 tps

AMD Ryzen™ AI 7 350 with 32 GB DRAM

Qwen 3 0.6B

80 tps

Prefill speed: 1,356 tps with 2K prompt

Gemma3 1B

66 tps

Prefill speed: 1,657 tps with 16K prompt

Gemma3 4B Vision

On-device power efficiency (Tokens/s/Watt or Tokens/Joule)